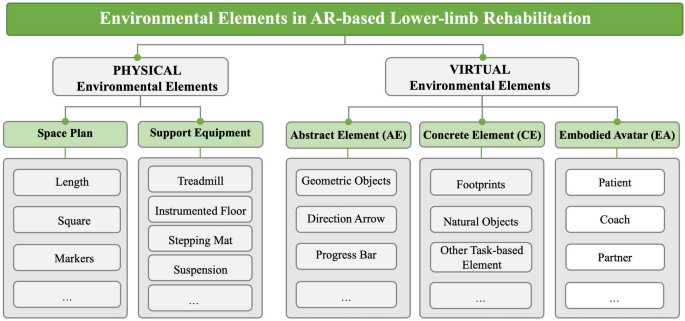

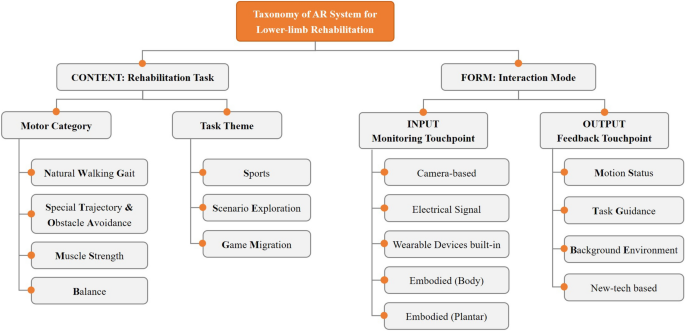

A primary focus of our analysis was the integration of physical and virtual environmental elements within AR systems for lower-limb rehabilitation training. We proposed a reference framework (Fig. 2) to optimize the coordination of these elements within the AR environment and strengthen support for lower-limb rehabilitation (addressing RQ1). We also developed a taxonomy of AR systems tailored to lower-limb rehabilitation (Fig. 3), identifying rehabilitation tasks and interaction modes as the two primary dimensions guiding the design of AR environments (addressing RQ2). The 25 selected studies were organized according to the taxonomy’s sub-dimensions: motor categories, task themes, and the detailed content of rehabilitation tasks, together with the monitoring and feedback devices, information types, and sensory modalities that underpin interaction modes. Drawing on insights from both the framework and the taxonomy, we explored strategies for using these structured elements to improve engagement in lower-limb rehabilitation (addressing RQ3). The results of data extraction from the 25 studies are summarized in Table 1. We examined how various environmental elements contribute to enhancing the rehabilitation training experience, particularly by optimizing rehabilitation tasks and interaction modes.

Environmental Elements in AR-based Lower-limb Rehabilitation

Overview of the Taxonomy of AR Systems for Lower-limb Rehabilitation

Across the 25 studies, 452 participants (aged 18 to 83 years; both male and female) took part in AR-based rehabilitation settings. Their physical conditions ranged from healthy individuals to patients with stroke, Parkinson’s disease, and multiple sclerosis.

Physical and virtual elements for AR environment design in lower-limb rehabilitation (RQ1)

This scoping review further examined the physical and virtual environmental components of AR environments across the 25 studies and proposed a structured framework to facilitate task execution and optimize interaction experiences in lower-limb rehabilitation. AR environments are fundamentally divided into physical and virtual segments (Fig. 2). The physical environment refers to the tangible spatial setting in which rehabilitation activities are conducted, including the essential physical infrastructure. Various physical environmental elements, including temperature, lighting, color schemes, flooring materials, and artistic landscapes, have been considered to optimize these surroundings [54]. By contrast, the virtual environment comprises the digital overlays provided by AR systems, which enrich real-world settings with rehabilitation-focused information. The effective design of AR-based rehabilitation scenarios depends on the seamless integration of both physical and virtual components to enhance the overall quality and impact of the rehabilitation process.

Physical environmental elements in AR environments typically comprise two main components: space planning and support equipment. To accommodate diverse movements and rehabilitation-task themes, the design of AR environments must integrate physical features that facilitate training. For straightforward walking tasks, space planning generally required a linear area 10 to 25 m long [31, 34, 36, 38]. More complex tasks required dedicated rectangular spaces with dimensions tailored to the scenario. For example, a 3 × 5-m space was used for a “gold ingot treasure hunt” [44], while a 14 × 4-m area was required for a slalom course featuring virtual lamps [41]. The strategic arrangement of markers within these spaces is crucial for defining AR environments [45, 46]. Certain rehabilitation exercises also require specialized support equipment, such as treadmills for gait exercises [37], instrumented surfaces for stepping tasks [30, 31], and suspension systems for sports-themed activities [50].

Virtual environmental elements are categorized into three types: abstract elements, concrete elements, and embodied avatars, each of which plays a role in providing effective interactive feedback. Virtual content should be tailored to the scenario’s requirements to enhance user engagement and task performance. Abstract elements use basic visual components to guide rehabilitation movements, including geometric shapes such as lines, surfaces, and bodies [29, 30, 31, 34, 35, 38]. They also include interface symbols such as directional arrows [41, 42] and progress indicators [53] that guide and inform users during tasks. Concrete elements provide more tangible and contextually rich cues in the AR environment. Notable examples include virtual footprints for gait guidance [5, 55] and representations of natural elements such as plants, rivers, and birds [36, 37, 51], which have been shown to subtly enhance the rehabilitation experience [56]. Embodied avatars serve a variety of roles in delivering intuitive training guidance. These avatars can represent patients with enhanced performance capabilities [43, 46, 49], act as virtual coaches for action modeling [47], or serve as companions offering emotional encouragement and support [32]. A notable application was the introduction of an exoskeleton avatar, which visualized both the patient’s intended motion (represented by a motion intention avatar) [58] and the actual motion trajectory of the exoskeleton [57]. By displaying these elements side by side, the system enables patients to make timely adjustments to their lower-limb movements based on comparative feedback.

Key factors guiding the design of AR environments tailored for lower-limb rehabilitation

The design of AR environments for lower-limb rehabilitation requires careful consideration of both physical and virtual components to meet diverse therapeutic needs. Figure 3 presents a taxonomy of AR systems for lower-limb rehabilitation, highlighting two primary dimensions: rehabilitation tasks and interaction modes. Figure 2 provides a reference for identifying key environmental factors that contribute to engaging, context-appropriate, and personalized rehabilitation experiences.

Rehabilitation tasks performed in AR environments (RQ2-1)

To understand how AR environments were constructed and presented for rehabilitation, both the motor categories and task themes of the included studies were examined. First, rehabilitation tasks were classified based on the core lower-limb movements and postures targeted within AR settings. Simultaneously, tasks were categorized according to their thematic context to identify the most common training scenarios used to engage patients.

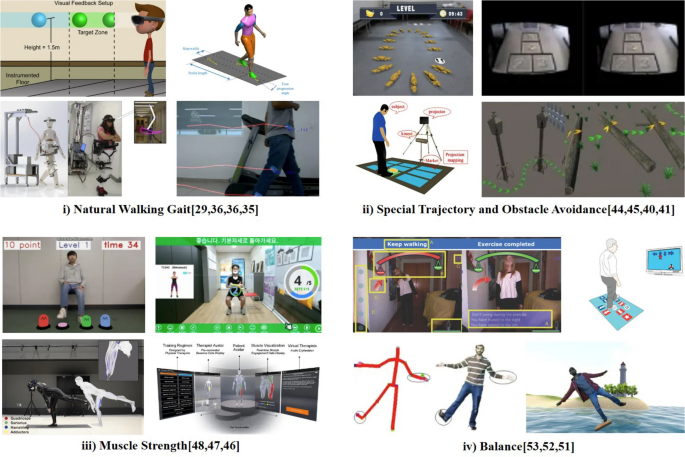

Motor category: Analysis revealed four primary lower-limb motor categories: natural walking gait (NWG) (n = 10), special trajectory and obstacle avoidance (ST&OA) (n = 6), muscle strength (MS) (n = 3), and balance (B) (n = 5). Figure 4 illustrates representative examples of these categories. NWG and ST&OA tasks aim to practice everyday gait patterns and navigational skills; MS tasks focus on developing the foundational strength necessary for mobility; and B tasks target improvements in postural stability. The following descriptions highlight the characteristics of these four motor categories, including the typical rehabilitation tasks and key physical and virtual elements integrated within the AR systems.

Motor Categories in Existing Research

i) Natural Walking Gait (NWG): Restoring a natural walking gait is a primary goal of lower-limb rehabilitation. AR environments commonly incorporated physical equipment such as treadmills [37] and instrumented floors [30] to facilitate gait training. However, notable differences between treadmill walking and overground walking [59] might limit the transfer of training effects to real-world contexts [24]. As an alternative, some systems used natural ground environments combined with virtual feedback projected directly onto the floor surface [40]. This approach enabled overground practice but may cause discomfort or instability if patients were required to look downward continuously during movement [36]. Another AR application visualized and guided gait for patients using assistive exoskeletons [29, 34]. In those cases, joint angle data from the exoskeleton were integrated into the AR system to provide real-time feedback on gait performance.

ii) Special Trajectory and Obstacle Avoidance (ST&OA): Beyond basic gait training, many advanced tasks involved navigating special trajectories or avoiding obstacles. Physical obstacle courses posed safety risks and were often resource-intensive to implement, especially for patients with limited mobility. AR provided a safer and more adaptable alternative for ST&OA training by overlaying virtual pathways and obstacles onto the real world. For example, virtual circular pathways on the ground were used to improve turning ability in patients [44], and an AR hopscotch grid allowed for precise stepping practice [45]. Virtual obstacles such as approaching objects and simulated holes created interactive trajectories without exposing patients to real tripping hazards. Held et al. [41] developed an AR parkour scenario in which patients stepped on virtual stones to cross a river, and the scenario adjusted its difficulty (e.g., stone spacing) based on patient performance [40].
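Performance-based difficulty adjustment of the kind described above (e.g., stone spacing) can be illustrated with a simple staircase rule. The sketch below is a minimal, hypothetical example and not the algorithm used in [40] or [41]: the class name `SpacingStaircase`, the starting spacing, the step size, and the bounds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class SpacingStaircase:
    """Hypothetical 1-up/1-down staircase for the gap between virtual stepping stones (m)."""

    spacing: float = 0.40      # assumed starting gap
    step: float = 0.05         # assumed change per trial
    min_spacing: float = 0.20  # assumed lower bound (easiest setting)
    max_spacing: float = 0.70  # assumed upper bound (hardest setting)

    def update(self, success: bool) -> float:
        """Widen the gap after a successful crossing, narrow it after a miss."""
        delta = self.step if success else -self.step
        proposed = round(self.spacing + delta, 3)  # rounding avoids float drift
        self.spacing = min(self.max_spacing, max(self.min_spacing, proposed))
        return self.spacing


# Usage: three trials (success, success, miss).
stairs = SpacingStaircase()
history = [stairs.update(ok) for ok in (True, True, False)]
print(history)  # [0.45, 0.5, 0.45]
```

A staircase keeps the task near the patient's current ability: each success makes the next crossing slightly harder, and each miss makes it easier, while the bounds keep the gap within a range a therapist has judged safe.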

iii) Muscle Strength (MS): Strengthening key muscle groups is essential during the early phase of rehabilitation, particularly for patients with severe lower-limb injuries, as sufficient muscle force is necessary to support walking exercises. This phase can be challenging due to pain [60] and decreased motivation [61]. AR systems enhanced patient self-efficacy during strength training [47] by providing visual feedback and motivational elements. To ensure correct muscle activation without continuous therapist supervision, some systems were combined with support tools (e.g., yoga mats [46] or chairs [48]) and sensors that detected muscle tension or activation levels [46]. For instance, visual overlays highlighted the specific muscle group intended for contraction, helping patients adjust their movements independently and accurately.

iv) Balance (B): Balance training is essential for preventing falls and secondary injuries, particularly among older adults and individuals with lower-limb impairments. AR environments have been used to enhance engagement by guiding patients along straight or curved paths and visualizing progress in real time [53]. Janssen et al. [42] incorporated virtual targets for 180° turning, which adapted to real-time performance and supported patients in overcoming gait freezing episodes. Hoang et al. [52] combined AR with a sensory stepping mat to train directional stepping, thereby promoting accurate foot placement. Moreover, exercises that may be perceived as monotonous in traditional therapy (e.g., repeated hip abduction) have been transformed into engaging AR scenarios. For example, patients practiced balance by standing on a virtual surfboard or plank suspended over water [51], broadening their range of motion and enhancing motivation.

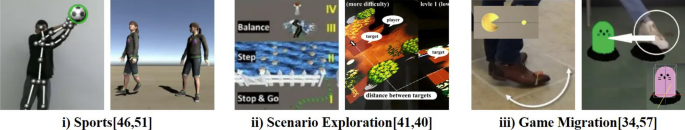

Task theme: More than half of the included studies (n = 13) replicated conventional rehabilitation exercises in AR with minimal thematic variation, resulting in a relatively rigid integration of virtual elements. In contrast, the remaining studies employed creative task themes that integrated physical and virtual environments more effectively, enriching the user experience. Across all 25 studies, we identified three task-theme categories: sports (S) (n = 3), scenario exploration (SE) (n = 3), and game migration (GM) (n = 5). Figure 5 presents representative examples of each category. The key features and representative cases of each task theme are described below.

Task Themes in Existing Research

i) Sports (S): Sports-themed AR scenarios create virtual versions of outdoor sports and games for rehabilitation. By assigning patients an active role in a sports context, these themes leverage natural body movements and the inherent motivational appeal of competition. For instance, Chen et al. [50] developed an AR simulation of an open-air football game, in which patients acted as goalkeepers attempting to save virtual footballs arriving from various directions. The scenario included a virtual audience with cheering sound effects to encourage participation and increase immersion. Desai et al. [51] created an AR exercise modeled on a water-based balance plank challenge, motivating patients to perform one-legged standing movements to maintain balance on a virtual plank. Similarly, Banaga et al. [49] developed a dance exergame in an AR setting for stroke rehabilitation, aiming to improve both cognitive and motor functions while fostering a sense of control even within confined spaces.

ii) Scenario Exploration (SE): These themes encourage patients to interact with the AR environment in an exploratory and open-ended manner, offering a personalized and immersive experience. Held et al. [41] constructed a forest-themed parkour adventure in AR, in which users followed continuously appearing arrows to navigate tasks such as crossing jungle branches and weaving through streetlights. Wang et al. [44] designed an AR scenario called “Treasure Island Adventure” for elderly patients with Parkinson’s disease. In this scenario, users followed a trail of virtual gold ingots to collect treasures while avoiding traps, helping patients feel confident attempting movements they previously considered potentially dangerous. Amiri et al. [40] developed a garden exploration scenario using spatial projection mapping, where patients interacted with location-specific bricks to reveal flowers and vegetation, thereby enhancing engagement compared to the typical Square-Stepping Exercise.

iii) Game Migration (GM): This approach extracts elements from popular games and integrates them into rehabilitation, effectively “gamifying” [62] the exercises to boost engagement and reduce cognitive load for patients. Tokuyama et al. [48] migrated the Whac-A-Mole game into an AR environment for lower-limb strength training, using Microsoft Kinect to track users’ stepping motions as they interacted with the game. Janssen et al. [42] incorporated elements of Pac-Man into an AR turning exercise, in which patients followed virtual pellets (dots) to practice 180° turns, with mixed results on turn-speed improvement. Hoang et al. [52] adapted arcade dance machine mechanics for balance training in older adults, using directional arrow cues and rhythmic music to increase motivation and deliver immediate performance feedback.

Interaction modes provided by AR environments (RQ2-2)

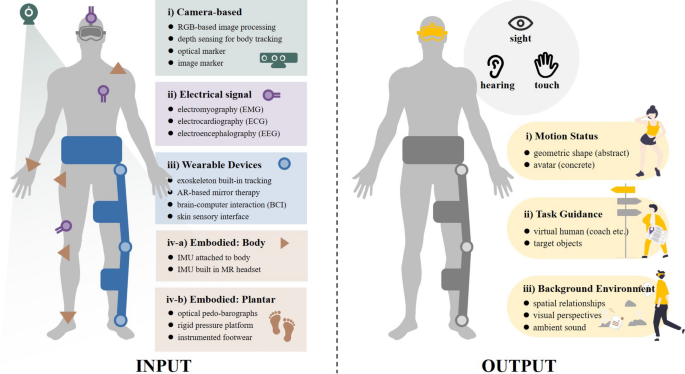

In AR environments designed for lower-limb rehabilitation, interaction modes are pivotal for creating an immersive and responsive experience, and focus on two types of touchpoints: information input and output. Input touchpoints are facilitated by monitoring sensors that capture key user data, such as movement patterns or physiological signals. Conversely, output touchpoints deliver feedback through multiple sensory modalities. Based on the included 25 papers, we systematically analyzed how current AR systems implement these touchpoints and how information flows between them. We also explored potential interaction modes enabled by emerging technologies. Figure 6 illustrates these interaction touchpoints, with a particular focus on the integration of assistive devices such as exoskeletons within the AR rehabilitation framework.

Input and Output Touchpoints in the AR-based Lower-Limb Rehabilitation System

INPUT: monitoring touchpoints

To explore the interaction possibilities between patients and the AR environment, we first examined the input mechanisms. AR systems in the reviewed studies supported a diverse array of sensors [63, 64], providing various monitoring touchpoints that captured distinct aspects of body movement during rehabilitation. These monitoring touchpoints were classified into five main types: camera-based (n = 15), electrical signals (n = 2), wearable-device embedded (n = 2), body-attached motion sensors (n = 8), and plantar pressure sensors (n = 2) (see Fig. 6, left). The following subsections describe each type of monitoring touchpoint used in AR settings.

i) Camera-based touchpoints: These utilized optical sensors such as RGB or depth cameras to track overall posture and limb trajectories. The Microsoft Kinect (V1 or V2) was the most frequently employed camera in current research [37, 40, 43, 45, 48, 50, 51, 53], offering reliable markerless motion capture via its RGB camera and infrared depth sensor.

ii) Electrical signal monitoring touchpoints: These systems monitored physiological parameters in real time during rehabilitation training. Among commonly monitored signals, including electromyography (EMG), electrocardiography (ECG), and electroencephalography (EEG), EEG has emerged as a novel means of capturing a patient’s motor intentions directly from brain activity. Several studies have integrated EEG cap devices to monitor patients’ movement intentions or mental engagement, effectively incorporating a brain–computer interface into the AR rehabilitation system [29, 33].

iii) Wearable device built-in touchpoints: Sensors embedded in wearable rehabilitation devices, such as robotic exoskeletons, provided another input modality. These touchpoints can automatically monitor and even adjust training exercises, representing a new paradigm of device-assisted rehabilitation [65, 66]. For example, sensors installed at the joints or pressure points of a lower-limb exoskeleton can record joint angles, forces, and torques [34], thereby enabling the AR system to adapt feedback in real time as the exoskeleton moves through rehabilitation exercises.
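To illustrate how exoskeleton joint data could drive real-time AR feedback, the sketch below compares measured joint angles with target angles and returns one cue per joint, which an AR layer could then render (for example, by highlighting the joint). It is a minimal sketch under stated assumptions: the function name `joint_feedback`, the joint names, the 5° tolerance, and the cue strings are hypothetical and are not taken from [34].

```python
from __future__ import annotations


def joint_feedback(
    measured: dict[str, float],
    target: dict[str, float],
    tolerance_deg: float = 5.0,
) -> dict[str, str]:
    """Return one cue per joint comparing measured and target angles (degrees)."""
    cues: dict[str, str] = {}
    for joint, goal in target.items():
        error = goal - measured[joint]  # positive: the joint has not reached its target yet
        if abs(error) <= tolerance_deg:
            cues[joint] = "on target"
        elif error > 0:
            cues[joint] = "increase angle"
        else:
            cues[joint] = "decrease angle"
    return cues


# Usage with hypothetical exoskeleton readings and targets for one gait phase.
reading = {"hip": 20.0, "knee": 40.0, "ankle": 10.0}
goal = {"hip": 22.0, "knee": 55.0, "ankle": 0.0}
print(joint_feedback(reading, goal))
# {'hip': 'on target', 'knee': 'increase angle', 'ankle': 'decrease angle'}
```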

Beyond these conventional monitoring and feedback modes, several studies have explored innovative interaction modes enabled by emerging technologies, opening new avenues for engaging rehabilitation practices. Examples include enhanced mirror therapy, brain–computer interfaces, and skin-based sensory feedback:

- Mirror Therapy in AR: Conventional mirror therapy reflects movements of the healthy limb to create the illusion that the affected limb is functioning normally [67]. AR extends this approach in several ways. Unberath et al. [68] combined a physical mirror with an HMD-based AR system to stabilize limbs and allow observation of static movements, thereby creating new opportunities for cortical reorganization and improved functional mobility. Similarly, Desai et al. [51] employed avatars in an AR environment to replicate rehabilitation movements in real time, enabling patients to engage in more dynamic tasks and fostering a greater sense of achievement.

- Brain-Computer Interface (BCI): BCIs integrate hardware and software to translate neural activity into system commands [69]. Wang et al. [29] proposed a portable steady-state visual evoked potential-based BCI (SSVEP-BCI) system specifically designed for use within AR rehabilitation exoskeletons. Unlike traditional BCIs that rely on stationary screen-based stimuli, AR HMDs can provide real-time visual feedback directly aligned with patient movement, facilitating novel active–passive hybrid training modes.

- Skin Sensory Interface: Many AR applications depend on HMDs for visual and auditory feedback, but the bulk and discomfort associated with prolonged use pose challenges, especially for individuals with lower-limb injuries [70]. Advances in nanomaterials and flexible wearable sensors, including those for pressure, strain, acoustic, and physiological signals (e.g., electrooculography (EOG) and EEG), pave the way for lighter, more comfortable haptic input methods. Soft AR contact lenses and actuators capable of delivering force, thermal, and vibrational feedback introduce innovative skin sensory interfaces (e-skin), thereby enhancing the user experience through novel output modalities [71].
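For the SSVEP-BCI described above, the underlying principle is that gazing at a stimulus flickering at a given frequency produces EEG power at that frequency and its harmonics. The sketch below classifies a single EEG channel by comparing spectral power at each candidate stimulus frequency. This simplified spectral-peak approach is swapped in for illustration; it is not the method of Wang et al. [29] (many SSVEP systems use more robust techniques such as canonical correlation analysis), and the function names, sampling rate, and stimulus frequencies are assumptions. Mapping each detected frequency to an exoskeleton command would be system-specific.

```python
from __future__ import annotations

import math


def power_at(signal: list[float], fs: float, freq: float) -> float:
    """Power of `signal` at a single frequency `freq` (Hz), computed as one DFT bin."""
    re = sum(x * math.cos(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    return (re * re + im * im) / len(signal)


def detect_ssvep(signal: list[float], fs: float, stimulus_freqs: list[float], harmonics: int = 2) -> float:
    """Return the stimulus frequency whose fundamental plus harmonics carry the most power."""
    scores = {
        f: sum(power_at(signal, fs, h * f) for h in range(1, harmonics + 1))
        for f in stimulus_freqs
    }
    return max(scores, key=scores.get)


# Usage: 2 s of synthetic "EEG" at 250 Hz dominated by a 10 Hz response.
FS = 250
t = [n / FS for n in range(2 * FS)]
eeg = [math.sin(2 * math.pi * 10 * ti) + 0.3 * math.sin(2 * math.pi * 12 * ti) for ti in t]
print(detect_ssvep(eeg, FS, [8, 10, 12, 15]))  # 10
```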

iv) Embodied Touchpoints: Inertial and pressure sensors attached to the patient’s body also serve as “embodied” monitoring touchpoints.

- Inertial Measurement Units (IMU): Positioned on various body segments, IMUs capture real-time acceleration and angular velocity data, from which kinematic information such as velocity and displacement can be derived by numerical integration. Commercial IMU products are lightweight, modular, and cost-effective, which has enabled their widespread use across diverse research initiatives [41, 42]. In addition, some AR HMDs (e.g., HoloLens) have built-in IMU modules that monitor head movements and, in conjunction with gait analysis algorithms, body posture [38].

- Plantar Touchpoints: These sensors monitor the interaction between the feet of individuals with lower-limb injuries and the ground or exoskeletal plantar attachments. Using pressure-sensor arrays [30, 31], these touchpoints record motion data, including plantar pressure distribution and center-of-gravity shifts, which are essential for tailoring rehabilitation feedback to individual gait patterns.
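The kinematic and plantar quantities mentioned above can be sketched as follows: velocity and displacement are obtained by integrating acceleration over time, and the center of pressure is the pressure-weighted mean position of the sensors in an insole or mat array. This is a minimal illustration with hypothetical function names and sensor layout. Practical IMU pipelines must also remove gravity, account for sensor orientation, and correct integration drift (e.g., by resetting velocity during stance), none of which this sketch does.

```python
from __future__ import annotations


def cumulative_trapezoid(samples: list[float], dt: float, initial: float = 0.0) -> list[float]:
    """Cumulatively integrate evenly spaced samples with the trapezoidal rule."""
    result = [initial]
    for a, b in zip(samples, samples[1:]):
        result.append(result[-1] + 0.5 * (a + b) * dt)
    return result


def center_of_pressure(
    pressures: list[float], positions: list[tuple[float, float]]
) -> tuple[float, float] | None:
    """Pressure-weighted mean (x, y) of sensor positions; None if nothing is loaded."""
    total = sum(pressures)
    if total <= 0:
        return None
    x = sum(p * px for p, (px, _) in zip(pressures, positions)) / total
    y = sum(p * py for p, (_, py) in zip(pressures, positions)) / total
    return x, y


# Usage: constant 1 m/s^2 forward acceleration sampled every 0.5 s (gravity already removed).
accel = [1.0] * 5
velocity = cumulative_trapezoid(accel, dt=0.5)         # [0.0, 0.5, 1.0, 1.5, 2.0]
displacement = cumulative_trapezoid(velocity, dt=0.5)  # [0.0, 0.125, 0.5, 1.125, 2.0]

# Hypothetical 2 x 2 insole grid in metres (x: medial-lateral, y: heel-to-toe),
# with the largest load on one forefoot cell.
cells = [(0.0, 0.0), (0.05, 0.0), (0.0, 0.10), (0.05, 0.10)]
print(center_of_pressure([1.0, 1.0, 2.0, 0.0], cells))
```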

OUTPUT: feedback touchpoints

This section examines the output mechanisms of AR environments, with a specific focus on feedback touchpoints, as analyzed across the 25 included studies. AR environments can deliver various forms of augmented feedback to patients. Most studies employed MR or VR headsets (n = 13), followed by video streams on computer or TV screens (n = 6) and spatial projections (n = 4). Feedback content was typically categorized into three types: motion status (MS) (n = 10), task guidance (TG) (n = 20), and background environment (BE) (n = 14) (see Fig. 6, right). The following sections describe how each category was implemented in AR environments for lower-limb rehabilitation:

i) Motion Status: Real-time feedback on motion status is critical for helping patients verify and correct their movements during rehabilitation. AR environments have facilitated this by enabling patients to intuitively understand and verify the accuracy of their training movements. Existing studies have often used abstract elements or concrete avatars to visualize motion status, where changes in the size and color of geometric shapes such as circles [31], spheres [30], and cylinders [50] indicate variations in walking speed and posture, helping patients adjust their walking rhythm and speed. For instance, Hurtado et al. [35] reflected the real-time movement of the three primary joints of the lower limb (hip, knee, and ankle) via three distinct cubes. Hidayah et al. [34] depicted the full trajectory lines of a patient’s foot points through a gait cycle. Zhu et al. [46] visualized the real-time status of key muscle groups in the lower limbs using a virtual body with translucent muscle tissues, where variations in color intensity revealed the force exerted by each group, helping patients recognize proper muscle activation and minimize the risk of secondary injuries. Furthermore, Banaga et al. [49] simplified task execution by having patients perform basic movements, with more complex motor activities represented by energetic avatars, thereby enhancing motor feedback and bolstering the patient’s sense of control over the body. Luchetti et al. [43] constructed a semi-transparent 2D avatar that can switch between first-person and third-person perspectives, offering versatile viewpoints for understanding and performing rehabilitation exercises.
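As an illustration of encoding motion status in the size and color of a geometric shape, the sketch below converts walking speed into a green-to-red color and a sphere radius. The mapping, the function name `speed_to_visual`, the base radius, and the tolerance are hypothetical choices made for illustration and are not taken from [30], [31], or [50].

```python
from __future__ import annotations


def speed_to_visual(
    speed: float, target: float, tolerance: float, base_radius: float = 0.10
) -> tuple[tuple[int, int, int], float]:
    """Map walking speed (m/s) to an RGB color and a sphere radius (m).

    Assumes target > 0 and tolerance > 0.
    """
    # Normalized deviation: 0 on target, 1 once |speed - target| reaches the tolerance.
    deviation = min(abs(speed - target) / tolerance, 1.0)
    color = (round(255 * deviation), round(255 * (1 - deviation)), 0)  # green -> red
    # The sphere grows when the patient walks faster than the target and shrinks when slower.
    radius = base_radius * (1 + 0.5 * (speed - target) / target)
    return color, round(radius, 4)


print(speed_to_visual(1.0, target=1.0, tolerance=0.2))  # ((0, 255, 0), 0.1)
print(speed_to_visual(0.6, target=1.0, tolerance=0.2))  # ((255, 0, 0), 0.08)
```

Separating the two channels (color for how far off target, size for the direction of the error) is one way to let a patient read both pieces of information at a glance; other encodings are equally plausible.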

ii) Task Guidance: Task guidance provides instructional support for patients as they perform rehabilitation tasks [72]. Many studies have employed virtual coaches or target objects to demonstrate step-by-step movements. Jeon et al. [47] used multi-angle videos of a virtual coach, presented from both frontal and lateral perspectives within the AR environment, to provide intuitive guidance. Miller et al. [32] introduced a realistic walking avatar, positioned ahead of the patient within an HMD-based AR setting. This design leveraged patients’ natural tendency to synchronize their pace with a walking partner, thereby encouraging coordinated movement patterns [55].

Bennour et al. [36] displayed virtual footprints overlaid on the ground to visualize gait parameters such as stride length, stride width, and stride speed. To distinguish movements of the left and right limbs, contrasting colors (e.g., red and blue) were used [43, 52], offering more personalized visual cues for bilateral coordination. Wang et al. [44] incorporated virtual cave obstacles into the real-world ground plane, aligning visual elements with users’ cognitive expectations and increasing task engagement. Tokuyama et al. [48] enhanced interactivity by embedding a game-like mechanic in which virtual moles randomly appeared and disappeared, promoting sustained attention throughout the rehabilitation session. Garrido et al. [53] used a virtual balance board to support task guidance, enabling patients to visually assess and adjust their balance and making balance control easier to grasp.

iii) Background Environment: The background environment provides the contextual and sensory setting for AR-based rehabilitation. Analysis of the 25 included studies identified three components integral to a cohesive and immersive AR experience: spatial relations, observation perspectives, and ambient sounds. These elements support seamless integration between the physical and virtual worlds, thereby enhancing the overall rehabilitation process.

- Spatial Relation: Alignment between physical and virtual spaces is essential in AR environments. Traditional rehabilitation exercises often use horizontal stripes on the floor as navigational aids. Ahn et al. [38] designed these lines in AR with a perspective setting that changes with distance. Virtual elements can be anchored in the environment as either world-locked (environment-relative) or body-locked (user-attached). Guinet et al. [31] noted that body-locked anchors can disrupt task performance because of their variability. Improper overlap or occlusion between virtual elements and the user’s body can also disrupt accurate spatial perception. To address this, Sekhavat et al. [37] added virtual contour lines around the edges of the feet, thereby preventing footprint–body occlusion.

- Observation Perspective: Viewing perspective plays a key role in accurately presenting lower-limb movements, yet it is often underemphasized. Hurtado et al. [35] demonstrated that side, frontal, and overhead perspectives are effective for visualizing joint trajectories. Luchetti et al. [43] implemented a third-person view combining frontal and overhead angles, which enhanced patients’ perception of their movement patterns and spatial orientation.

- Ambient Sounds: Auditory cues enrich the sensory experience and contribute to engagement and motivation during rehabilitation. Held et al. [41] incorporated natural sounds, such as bird calls triggered by knee movements, to provide encouraging feedback. Ko et al. [33] implemented collision sounds in virtual object interactions to enhance immersion. Amiri et al. [40] used dynamic sound cues to guide movements, distinguish correct from incorrect actions, and indicate progress by adjusting background audio, thereby reinforcing user motivation.
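The difference between world-locked and body-locked anchoring described under Spatial Relation can be made concrete with a 2D ground-plane sketch. World-locked content keeps fixed world coordinates, so its position relative to the user changes as the user walks; body-locked content keeps a fixed offset in the user’s frame, so it moves through the world with every step and turn, which is one way to see the variability Guinet et al. [31] reported. The `Pose` type and the function names are hypothetical, and a real AR runtime would use full 3D poses supplied by its tracking system.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Pose:
    """User position on the ground plane (m) and heading (rad, 0 = facing +x)."""

    x: float
    y: float
    yaw: float


def body_locked_to_world(user: Pose, forward: float, left: float) -> tuple[float, float]:
    """Body-locked content: a fixed offset in the user's frame, so it follows the user."""
    c, s = math.cos(user.yaw), math.sin(user.yaw)
    return user.x + c * forward - s * left, user.y + s * forward + c * left


def world_locked_to_user(user: Pose, point: tuple[float, float]) -> tuple[float, float]:
    """World-locked content: a fixed world point, expressed as (forward, left) from the user."""
    dx, dy = point[0] - user.x, point[1] - user.y
    c, s = math.cos(user.yaw), math.sin(user.yaw)
    return c * dx + s * dy, -s * dx + c * dy


# A floor stripe anchored 3 m ahead of the start position stays put in the world,
# so it appears closer after the user steps forward 1 m.
stripe = (3.0, 0.0)
for pose in (Pose(0.0, 0.0, 0.0), Pose(1.0, 0.0, 0.0)):
    print(world_locked_to_user(pose, stripe))  # (3.0, 0.0), then (2.0, 0.0)
```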
